Slow Reveal

Notice, Wonder, Connect

Student Suggestions for Catchy Headlines: “The Lights of Nome,” “Dwindling Nightlight?,” and “Nome Your Time (Know Your Time).”

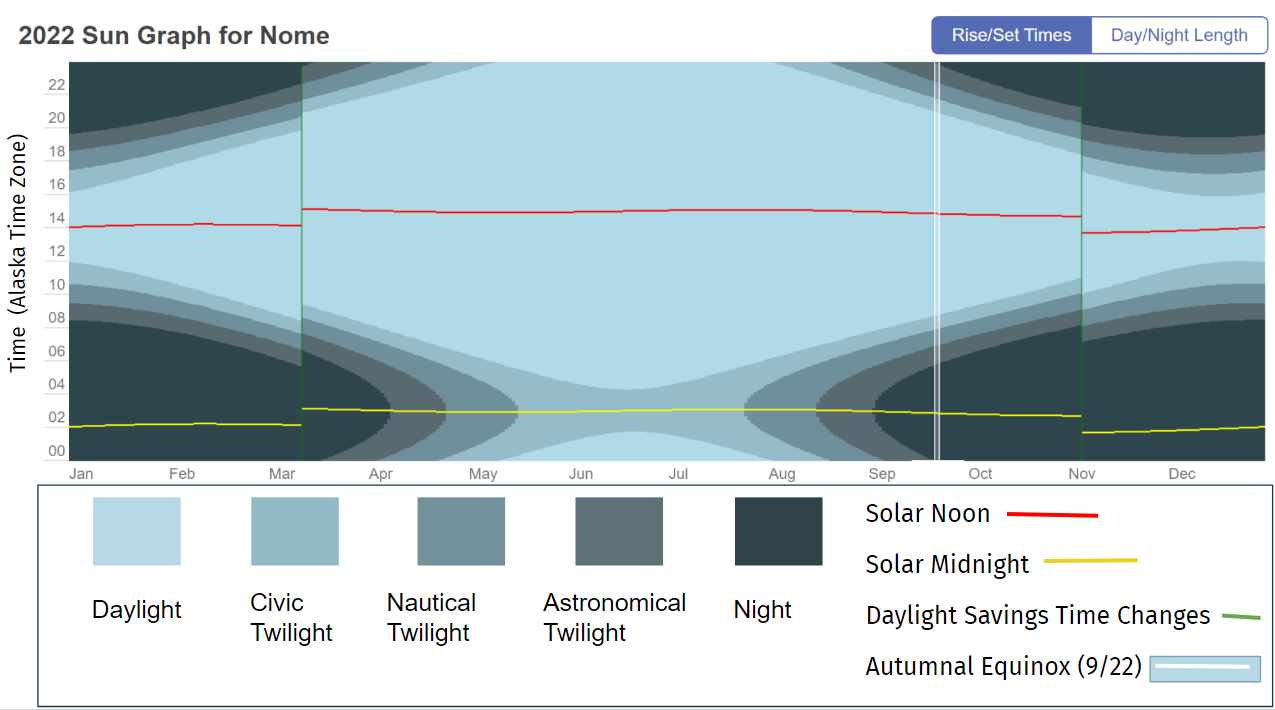

TimeandDate.com makes sun graphs for any location in the world. These graphs show the timing and duration of daylight, twilight, and night over the course of a year (here, 2022). We focus on Nome, Alaska, which lies in the northern part of the state and near the western edge of the Alaska Time Zone. How does light change over the year? How do we humans adjust our clocks to shape our connections with the natural world and with each other?

This sun graph depicts daylight, twilight, and night throughout 2022 for Nome, Alaska, using a 24-hour clock (rather than am and pm) to show local times. The graph shows how skewed Nome’s clock time is from its solar time. Solar noon in Nome – when the sun is highest in the sky – happens at about 14:00 (2:00 pm) in the winter and at about 15:00 (3:00 pm) in the summer. Similarly, the darkest part of the night is at 2:00 or 3:00 in the morning, not at midnight.
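The two-hour skew can be checked with a little arithmetic: the sun moves across 15° of longitude per hour, so every degree west of a time zone's central meridian delays solar noon by about 4 minutes. The sketch below is only a rough illustration. Nome's longitude (about 165.4° W) is an approximate value supplied here rather than taken from the graph, the function names are made up for this example, and the calculation ignores the seasonal wobble of up to roughly a quarter hour in either direction (the "equation of time").

```python
def mean_solar_noon(longitude_deg, utc_offset_hours):
    """Clock time (in decimal hours) of average solar noon.

    longitude_deg: degrees east of Greenwich (west is negative).
    utc_offset_hours: the clock's offset from UTC, e.g. -9 for Alaska Standard Time.
    """
    zone_meridian = 15 * utc_offset_hours            # meridian where clock noon matches solar noon
    minutes_late = (zone_meridian - longitude_deg) * 4  # 4 minutes per degree of longitude
    return 12 + minutes_late / 60


def as_clock(hours):
    """Format decimal hours as HH:MM on a 24-hour clock."""
    total_minutes = round(hours * 60)
    return f"{total_minutes // 60:02d}:{total_minutes % 60:02d}"


NOME_LONGITUDE = -165.4  # approximate; an assumption for this sketch

print(as_clock(mean_solar_noon(NOME_LONGITUDE, -9)))  # Alaska Standard Time (winter): 14:02
print(as_clock(mean_solar_noon(NOME_LONGITUDE, -8)))  # Alaska Daylight Time (summer): 15:02
```

Students can swap in the longitude of their own town and its UTC offset to see how far their clock noon is from solar noon.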

The graph differentiates among the different types of twilight (specific definitions are in the slide deck). From mid-May through mid-August, the darkest that it gets in Nome is “Civil Twilight,” when there is “still enough natural sunlight … that artificial light may not be required to carry out outdoor activities.” The graph also shows how switching over to Daylight Saving Time (in March) and back to Standard Time (in November) shifts clock time an hour later and then an hour earlier.

We chose Nome as the main graph because it’s close to the western limit of the Alaska Time Zone (-9 UTC) and so its solar time is particularly skewed. This additional slide shows how sun graphs differ at 5 locations within the Alaska Time Zone; Disneyland in California is included for further comparison.

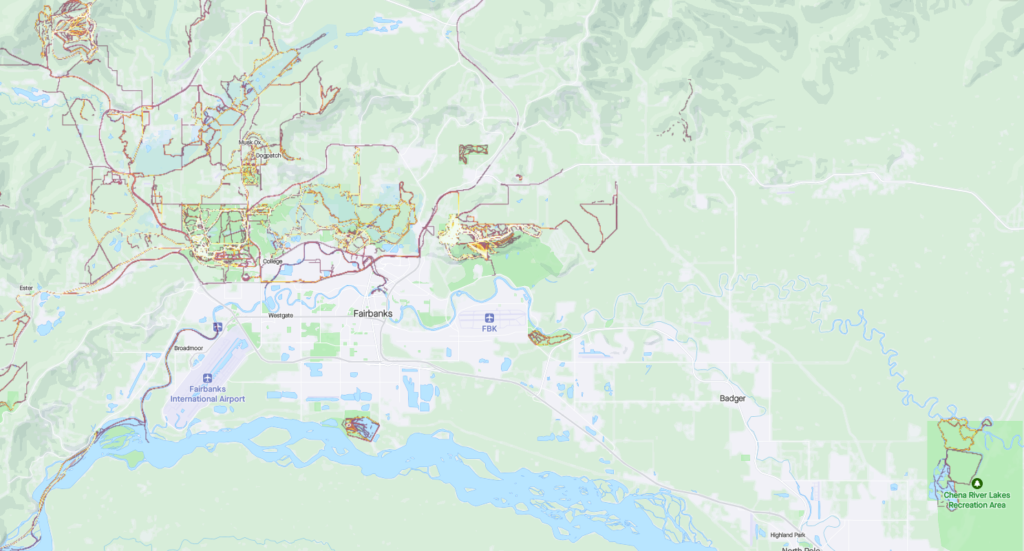

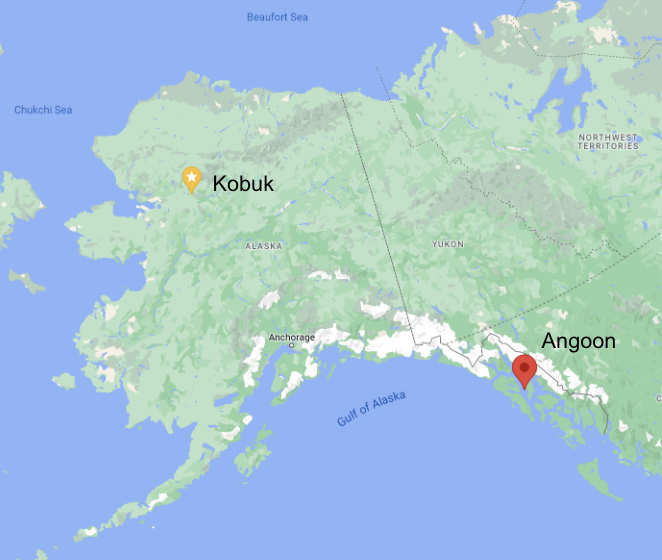

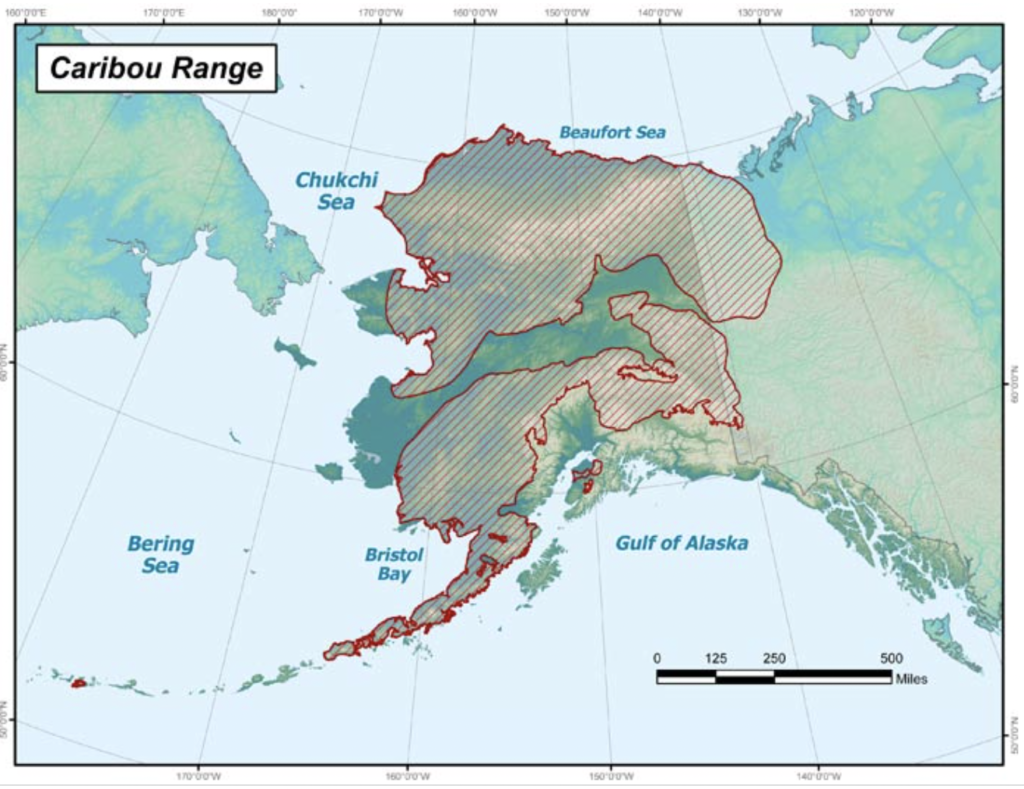

Viewing these sun graphs side by side, we can see that in Unalaska – which is about as far west as Nome – solar time is equally skewed. Because Unalaska is farther south, however, it experiences astronomical twilight in the summer months, when it’s dark enough for most celestial objects to be viewed. By contrast, Hyder, at the far eastern edge of the Alaska Time Zone, experiences solar noon a little early (11:30 am) in the winter and a little late (12:30 pm) in the summer. (Of course, variation within the hour before and after solar noon is to be expected.) Utqiagvik is in the northernmost part of Alaska and its sun graph reflects that – no twilight at all in the summer months and no daylight at all in the winter months. However, Utqiagvik is not as far west as Nome, and its solar noon is less “off” (about 1:00 pm in the winter and 2:00 pm in the summer). The sun graphs of Juneau and Fairbanks reflect their respective locations. Disneyland, far to the south and closer to the equator, experiences much less variation in the amount of daylight each day and much shorter periods of twilight (note: it’s also in a different time zone).
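The effect of latitude on day length can be approximated with a standard textbook formula based on the sun's tilt at the solstices (about 23.44°). The sketch below is a rough illustration and not how TimeandDate.com builds its graphs. The latitudes are approximate values supplied here, the function name is made up for this example, and the formula ignores atmospheric refraction and the width of the sun's disk, so actual daylight runs somewhat longer than these estimates.

```python
import math

SOLSTICE_TILT = 23.44  # sun's declination in degrees at the June solstice


def day_length_hours(latitude_deg, declination_deg):
    """Approximate hours between sunrise and sunset (geometric, no refraction)."""
    x = -math.tan(math.radians(latitude_deg)) * math.tan(math.radians(declination_deg))
    if x <= -1:
        return 24.0  # sun never sets (midnight sun)
    if x >= 1:
        return 0.0   # sun never rises (polar night)
    half_day_angle = math.degrees(math.acos(x))  # degrees the earth turns from solar noon to sunset
    return 2 * half_day_angle / 15               # the earth turns 15 degrees per hour


# Approximate latitudes in degrees north; assumptions for this sketch
places = {"Utqiagvik": 71.29, "Nome": 64.50, "Disneyland": 33.81}

for name, latitude in places.items():
    june = day_length_hours(latitude, SOLSTICE_TILT)
    december = day_length_hours(latitude, -SOLSTICE_TILT)
    print(f"{name}: about {june:.1f} h of daylight in late June, {december:.1f} h in late December")
```

The farther north the location, the wider the gap between the June and December values, which matches the pattern in the sun graphs.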

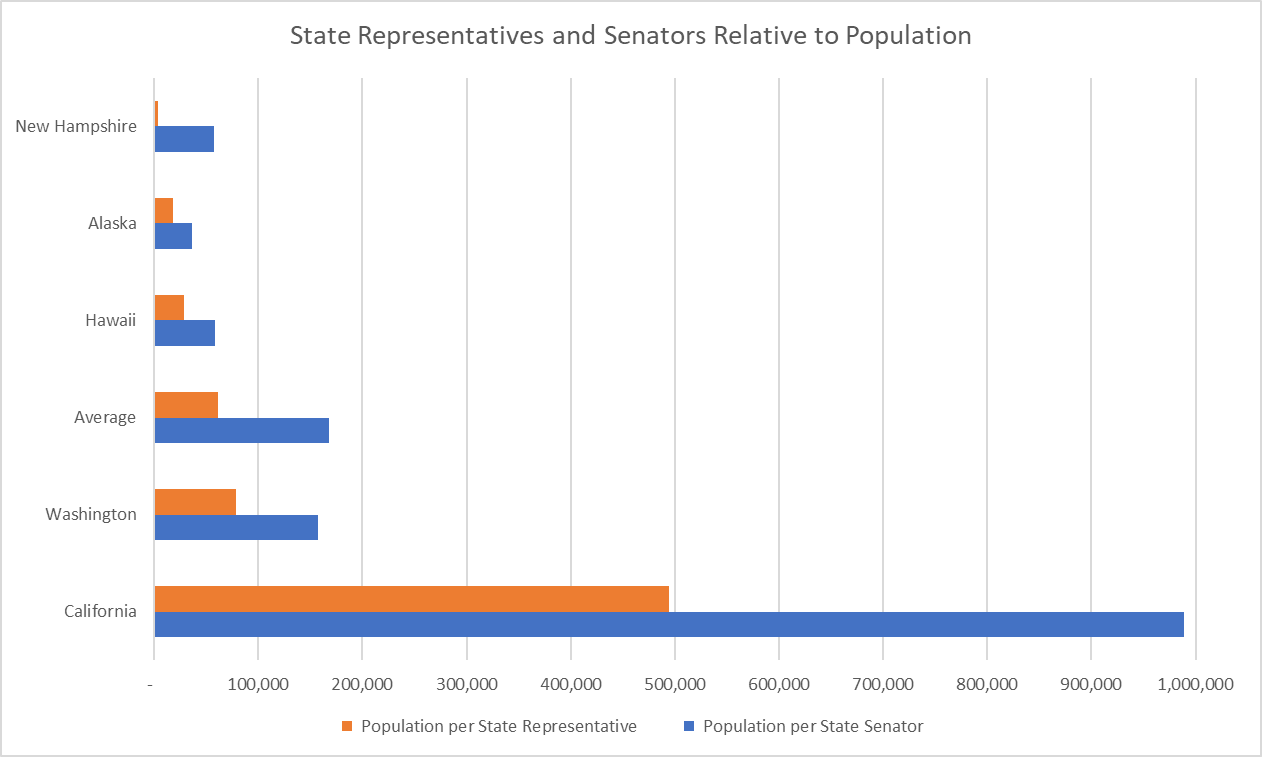

Time zones were initially figured out mathematically (by dividing the earth into 24 zones, one for each hour of the day, beginning in Greenwich, England, and then radiating out along longitudinal lines). They were then significantly adjusted to accommodate political boundaries and geographic landmarks. Since then, individual political entities (e.g., countries, states, and/or provinces) have been deciding for themselves how and where they want to adopt those time zones within their particular boundaries, and during which part(s) of the year. (Fig. 2) Each time zone is described by how it relates (+ or -) to UTC (Coordinated Universal Time). Greenwich, England is 0 UTC.
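Dividing 360° of longitude into 24 zones gives 15° per zone, so the purely "mathematical" zone for any place is its longitude divided by 15, rounded to the nearest whole hour. A minimal sketch of that arithmetic follows. The longitudes are approximate values supplied here, and the function name is made up for this example.

```python
def nominal_utc_offset(longitude_deg):
    """Whole-hour UTC offset from longitude alone (east positive, west negative)."""
    return round(longitude_deg / 15)  # 360 degrees / 24 hours = 15 degrees per zone


# Approximate longitudes; assumptions for this sketch
for name, longitude in [("Greenwich", 0.0), ("Hyder", -130.0), ("Nome", -165.4)]:
    print(name, nominal_utc_offset(longitude))
```

By longitude alone, Nome would sit at -11 UTC, two hours behind the -9 UTC that its clocks actually keep.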

The contiguous US spans 4 time zones – Eastern (-5 UTC), Central (-6 UTC), Mountain (-7 UTC), and Pacific (-8 UTC). Alaska, given that it is as wide as the contiguous US, also spans the equivalent of 4 time zones. Until as recently as 1983, all 4 time zones were used within Alaska, as shown (more or less) in the diagram below. Now, all of Alaska is either in Alaska Time (-9 UTC) or Hawaii-Aleutian Time (-10 UTC). The dividing line between time zones within Alaska is just west of Unalaska.

Deciding how to adapt clock time across broad geographic distances has been a complicated and often heated discussion for more than a century at many levels. For many years, each community set its own clocks according to the sun.

“In North America, a coalition of businessmen and scientists decided on time zones, and in 1883, U.S. and Canadian railroads adopted four (Eastern, Central, Mountain and Pacific) to streamline service. The shift was not universally well received. Evangelical Christians were among the strongest opponents, arguing ‘time came from God and railroads were not to mess with it,’ …” (NYTimes)

Similarly, discussions about Standard vs. Daylight Saving Time – whether to switch and, if not, which to keep permanently – have raged for decades in the US and elsewhere.

“To farmers, daylight saving time is a disruptive schedule foisted on them by the federal government; a popular myth even blamed them for its existence. To some parents, it’s a nuisance that can throw bedtime into chaos. To the people who run golf courses, gas stations and many retail businesses, it’s great.” (NYTimes)

Most recently, in the spring of 2022, the U.S. Senate voted to stay on Daylight Saving Time permanently. That bill is currently stalled in the U.S. House. Both Alaska senators voted to make Daylight Saving Time permanent.

In Alaska, the debate has revolved around whether a given choice of time zones would unify the state, force distant communities to adhere to clock times that adversely affect their daily lives, or connect Alaska more closely to (or disconnect it from) the US West Coast, where much business has centered. “Southeast Alaska was put on Pacific Time during World War II to synchronize the state capital with San Francisco and Seattle.” In 1983, when Alaska switched from 4 time zones to 2, some communities chose to stay on Pacific Time: Ketchikan, to be aligned with the banks and businesses in Seattle, and Metlakatla, to be aligned with the Bureau of Indian Affairs in Portland. They have since switched over to Alaska Time.

Elsewhere, China, a country even wider than the state of Alaska and spanning 5 time zones, has chosen to keep the entire country on Beijing time since 1949. The Yukon Territory, in 2020, decided to stop switching between daylight and standard time. It is now permanently at -7 UTC. That means that in the summer, Yukon clocks are one hour ahead of Alaska (i.e., they are aligned with Pacific Daylight Time) and in the winter, they are two hours ahead of Alaska (i.e., they are aligned with Mountain Standard Time).
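The Yukon comparison is simple subtraction of UTC offsets. The short sketch below works through it using only the offsets given in this write-up, plus -8 UTC for Alaska's clocks while Daylight Saving Time is in effect; the names are made up for this example.

```python
YUKON = -7            # permanent since 2020
ALASKA_STANDARD = -9  # Alaska clocks in winter
ALASKA_DAYLIGHT = -8  # Alaska clocks in summer, shifted one hour later


def hours_ahead(offset_a, offset_b):
    """How many hours clocks at offset_a run ahead of clocks at offset_b."""
    return offset_a - offset_b


print("Summer:", hours_ahead(YUKON, ALASKA_DAYLIGHT))  # 1
print("Winter:", hours_ahead(YUKON, ALASKA_STANDARD))  # 2
```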

What do you think?

- How are daylight hours in Nome similar to or different from those where you are? Why?

- How does Daylight Saving Time (March-November) impact you, if at all?

- Would you rather have more daylight in the morning year-round (that’d be Standard Time, like now, late November) or more daylight in the afternoon/evening year-round (that’d be Daylight Saving Time, like in summer and early fall)?

- If you were to choose either Daylight Saving Time or Standard Time to make permanent for the entire country, which would you choose and why? Who might have a different preference and why?

- If you were in charge of Time Zones for Alaska, how many would you choose? Which ones?

- Or, should we have Time Zones at all? Are there alternatives?

There’s a lot of fascinating history behind the creation of, and disagreements around, Time Zones and Daylight Saving Time at world, national, and state levels. We’ve included several very readable articles under Additional Resources; check them out.

Finally, one more note that may clear up some questions:

The Prime Meridian (0° longitude) and the antimeridian (180° longitude) “divide” the earth into the western and eastern hemispheres. Most of Alaska is east of the 180th meridian, but parts (e.g., Attu) are west of it; that means that Alaska is, technically (mathematically), both the westernmost and the easternmost state! The International Date Line runs roughly along the 180th meridian, but because it’s a political construct, it has been adjusted to run west of (rather than through) Alaska, so all of Alaska (and the US) stays on the same calendar date.

Additional Resources:

Visualization Type: Area Graph

Data Source: Time and Date

Visualization Source: Time and Date

This graph can easily be replicated. Go to TimeandDate.com and select the location whose sun graph you want.